Google Cloud is introducing what it calls its most powerful artificial intelligence infrastructure to date, unveiling a seventh-generation Tensor Processing Unit and expanded Arm-based computing options designed to meet surging demand for AI model deployment — what the company characterizes as a fundamental industry shift from training models to serving them to billions of users.

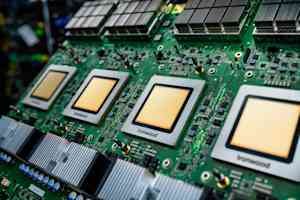

The announcement, made Thursday, centers on Ironwood, Google's latest custom AI accelerator chip, which will become generally available in the coming weeks. In a striking validation of the technology, Anthropic, the AI safety company behind the Claude family of models, disclosed plans to access up to one million of these TPU chips — a commitment worth tens of billions of dollars and among the largest known AI infrastructure deals to date.

The move underscores an intensifying competition among cloud providers to control the infrastructure layer powering artificial intelligence, even as questions mount about whether the industry can sustain its current pace of capital expenditure. Google's approach — building custom silicon rather than relying solely on Nvidia's dominant GPU chips — amounts to a long-term bet that vertical integration from chip design through software will deliver superior economics and performance.

Why companies are racing to serve AI models, not just train them

Google executives framed the announcements around what they call "the age of inference" — a transition point where companies shift resources from training frontier AI models to deploying them in production applications serving millions or billions of requests daily.

"Today's frontier models, including Google's Gemini, Veo, and Imagen and Anthropic's Claude train and serve on Tensor Processing Units," said Amin Vahdat, vice president and general manager of AI and Infrastructure at Google Cloud. "For many organizations, the focus is shifting from training these models to powering useful, responsive interactions with them."

This transition has profound implications for infrastructure requirements. Where training workloads can often tolerate batch processing and longer completion times, inference — the process of actually running a trained model to generate responses — demands consistently low latency, high throughput, and unwavering reliability. A chatbot that takes 30 seconds to respond, or a coding assistant that frequently times out, becomes unusable regardless of the underlying model's capabilities.

Agentic workflows — where AI systems take autonomous actions rather than simply responding to prompts — create particularly complex infrastructure challenges, requiring tight coordination between specialized AI accelerators and general-purpose computing.

Inside Ironwood's architecture: 9,216 chips working as one supercomputer

Ironwood is more than an incremental improvement over Google's sixth-generation TPUs. According to technical specifications shared by the company, it delivers more than four times the performance of its predecessor for both training and inference workloads, gains that Google attributes to system-level co-design rather than simply higher transistor counts.

The architecture's most striking feature is its scale. A single Ironwood "pod" — a tightly integrated unit of TPU chips functioning as one supercomputer — can connect up to 9,216 individual chips through Google's proprietary Inter-Chip Interconnect network operating at 9.6 terabits per second. To put that bandwidth in perspective, it's roughly equivalent to downloading the entire Library of Congress in under two seconds.

This massive interconnect fabric allows the 9,216 chips to share access to 1.77 petabytes of High Bandwidth Memory — memory fast enough to keep pace with the chips' processing speeds. That's approximately 40,000 high-definition Blu-ray movies' worth of working memory, instantly accessible by thousands of processors simultaneously. "For context, that means Ironwood Pods can deliver 118x more FP8 ExaFLOPS versus the next closest competitor," Google stated in technical documentation.
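As a rough sanity check on those figures, the per-chip share of memory and the byte-rate equivalent of the interconnect speed can be worked out directly. The sketch below assumes decimal (SI) units, which the article does not specify, and the function names are purely illustrative.

```python
# Figures quoted in the article. Decimal (SI) prefixes are an assumption.
POD_CHIPS = 9_216
POD_HBM_PETABYTES = 1.77
ICI_TERABITS_PER_SECOND = 9.6
BLU_RAY_MOVIES = 40_000

GIGABYTES_PER_PETABYTE = 1_000_000


def hbm_per_chip_gb(pod_petabytes: float, chips: int) -> float:
    """Average High Bandwidth Memory per chip, in gigabytes."""
    return pod_petabytes * GIGABYTES_PER_PETABYTE / chips


def terabits_to_terabytes(terabits: float) -> float:
    """Convert a bit rate to the equivalent byte rate (8 bits per byte)."""
    return terabits / 8


def gb_per_movie(pod_petabytes: float, movies: int) -> float:
    """Size per movie implied by the article's Blu-ray comparison."""
    return pod_petabytes * GIGABYTES_PER_PETABYTE / movies


if __name__ == "__main__":
    print(f"HBM per chip: {hbm_per_chip_gb(POD_HBM_PETABYTES, POD_CHIPS):.1f} GB")
    print(f"Interconnect: {terabits_to_terabytes(ICI_TERABITS_PER_SECOND):.1f} TB/s")
    print(f"Implied movie size: {gb_per_movie(POD_HBM_PETABYTES, BLU_RAY_MOVIES):.2f} GB")
```

Under these assumptions the pod's memory works out to roughly 192 GB per chip, and 9.6 terabits per second corresponds to 1.2 terabytes per second.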

The system employs Optical Circuit Switching technology that acts as a "dynamic, reconfigurable fabric." When individual components fail or require maintenance — inevitable at this scale — the OCS technology automatically reroutes data traffic around the interruption within milliseconds, allowing workloads to continue running without user-visible disruption.

This reliability focus reflects lessons learned from deploying five previous TPU generations. Google reported that its liquid-cooled systems have maintained approximately 99.999% fleet-wide availability since 2020, equivalent to less than six minutes of downtime per year.
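The downtime figure follows from simple arithmetic. A minimal sketch, assuming a 365-day year and treating availability as the fraction of minutes the fleet is up:

```python
MINUTES_PER_YEAR = 365 * 24 * 60  # 525,600 minutes in a non-leap year


def downtime_minutes_per_year(availability_percent: float) -> float:
    """Minutes of downtime per year implied by an availability percentage."""
    unavailable_fraction = 1 - availability_percent / 100
    return unavailable_fraction * MINUTES_PER_YEAR


if __name__ == "__main__":
    for availability in (99.9, 99.99, 99.999):
        minutes = downtime_minutes_per_year(availability)
        print(f"{availability}% -> {minutes:.2f} minutes of downtime per year")
```

At 99.999%, this gives about 5.26 minutes per year, consistent with the "less than six minutes" figure.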

Anthropic's billion-dollar bet validates Google's custom silicon strategy

Perhaps the most significant external validation of Ironwood's capabilities comes from Anthropic's commitment to access up to one million TPU chips — a staggering figure in an industry where even clusters of 10,000 to 50,000 accelerators are considered massive.

"Anthropic and Google have a longstanding partnership and this latest expansion will help us continue to grow the compute we need to define the frontier of AI," said Krishna Rao, Anthropic's chief financial officer, in the official partnership agreement. "Our customers — from Fortune 500 companies to AI-native startups — depend on Claude for their most important work, and this expanded capacity ensures we can meet our exponentially growing demand."

According to a separate statement, Anthropic will have access to "well over a gigawatt of capacity coming online in 2026" — enough electricity to power a small city. The company specifically cited TPUs' "price-performance and efficiency" as key factors in the decision, along with "existing experience in training and serving its models with TPUs."

Industry analysts estimate that a commitment to access one million TPU chips, with associated infrastructure, networking, power, and cooling, likely represents a multi-year contract worth tens of billions of dollars — among the largest known cloud infrastructure commitments in history.

James Bradbury, Anthropic's head of compute, elaborated on the inference focus: "Ironwood's improvements in both inference performance and training scalability will help us scale efficiently while maintaining the speed and reliability our customers expect."

Google's Axion processors target the computing workloads that make AI possible

Alongside Ironwood, Google introduced expanded options for its Axion processor family — custom Arm-based CPUs designed for general-purpose workloads that support AI applications but don't require specialized accelerators.

The N4A instance type, now entering preview, targets what Google describes as "microservices, containerized applications, open-source databases, batch, data analytics, development environments, experimentation, data preparation and web serving jobs that make AI applications possible." The company claims N4A delivers up to 2X better price-performance than comparable current-generation x86-based virtual machines.

Google is also previewing C4A metal, its first bare-metal Arm instance, which provides dedicated physical servers for specialized workloads such as Android development, automotive systems, and software with strict licensing requirements.

The Axion strategy reflects a growing conviction that the future of computing infrastructure requires both specialized AI accelerators and highly efficient general-purpose processors. While a TPU handles the computationally intensive task of running an AI model, Axion-class processors manage data ingestion, preprocessing, application logic, API serving, and countless other tasks in a modern AI application stack.

Early customer results suggest the approach delivers measurable economic benefits. Vimeo reported observing "a 30% improvement in performance for our core transcoding workload compared to comparable x86 VMs" in initial N4A tests. ZoomInfo measured "a 60% improvement in price-performance" for data processing pipelines running on Java services, according to Sergei Koren, the company's chief infrastructure architect.

Software tools turn raw silicon performance into developer productivity

Hardware performance means little if developers cannot easily harness it. Google emphasized that Ironwood and Axion are integrated into what it calls AI Hypercomputer — "an integrated supercomputing system that brings together compute, networking, storage, and software to improve system-level performance and efficiency."

According to an October 2025 IDC Business Value Snapshot study, AI Hypercomputer customers achieved, on average, a 353% three-year return on investment, 28% lower IT costs, and 55% more efficient IT teams.

Google disclosed several software enhancements designed to maximize Ironwood utilization. Google Kubernetes Engine now offers advanced maintenance and topology awareness for TPU clusters, enabling intelligent scheduling and highly resilient deployments. The company's open-source MaxText framework now supports advanced training techniques including Supervised Fine-Tuning and Generative Reinforcement Policy Optimization.

Perhaps most significant for production deployments, Google's Inference Gateway intelligently load-balances requests across model servers to optimize critical metrics. According to Google, it can reduce time-to-first-token latency by 96% and serving costs by up to 30% through techniques like prefix-cache-aware routing.

The Inference Gateway monitors key metrics including KV cache hits, GPU or TPU utilization, and request queue length, then routes incoming requests to the optimal replica. For conversational AI applications where multiple requests might share context, routing requests with shared prefixes to the same server instance can dramatically reduce redundant computation.
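To make the idea of prefix-cache-aware routing concrete, here is a toy sketch. It is not Google's implementation: the `Replica` class, the block size, the scoring rule, and the queue penalty are all hypothetical choices made for illustration. Each replica remembers which prompt prefixes it has already processed, and the router prefers the replica with the longest cached prefix, discounted by how busy that replica is.

```python
from __future__ import annotations

from dataclasses import dataclass, field

BLOCK_SIZE = 4  # tokens per cache block (hypothetical value)


@dataclass
class Replica:
    """Toy model server: tracks queue depth and cached prompt prefixes."""
    name: str
    queue_length: int = 0
    cached_prefixes: set[tuple[str, ...]] = field(default_factory=set)


def prefix_blocks(tokens: list[str]) -> list[tuple[str, ...]]:
    """Cumulative prefixes of the prompt, one per full block of tokens."""
    return [tuple(tokens[:end])
            for end in range(BLOCK_SIZE, len(tokens) + 1, BLOCK_SIZE)]


def matched_blocks(replica: Replica, tokens: list[str]) -> int:
    """Number of leading blocks of the prompt already cached on the replica."""
    count = 0
    for prefix in prefix_blocks(tokens):
        if prefix not in replica.cached_prefixes:
            break
        count += 1
    return count


def choose_replica(replicas: list[Replica], tokens: list[str],
                   queue_penalty: float = 0.5) -> Replica:
    """Pick the replica with the best cache-reuse versus load trade-off."""
    def score(replica: Replica) -> float:
        return matched_blocks(replica, tokens) - queue_penalty * replica.queue_length
    return max(replicas, key=score)


def route(replicas: list[Replica], tokens: list[str]) -> Replica:
    """Route a request, then record its queue slot and newly cached prefixes."""
    chosen = choose_replica(replicas, tokens)
    chosen.queue_length += 1
    chosen.cached_prefixes.update(prefix_blocks(tokens))
    return chosen


if __name__ == "__main__":
    pool = [Replica("a"), Replica("b")]
    shared_context = list("abcdefgh")  # stands in for a shared system prompt
    print(route(pool, shared_context + ["x"]).name)
    print(route(pool, shared_context + ["y"]).name)
    print(route(pool, ["z"] * 8).name)
```

In this sketch, the second request shares an eight-token prefix with the first and so lands on the same replica, while the unrelated third request goes to the idle one. A production gateway would also need cache eviction, request completion tracking, and real accelerator metrics, none of which are modeled here.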

The hidden challenge: powering and cooling one-megawatt server racks

Behind these announcements lies a massive physical infrastructure challenge that Google addressed at the recent Open Compute Project EMEA Summit. The company disclosed that it's implementing +/-400 volt direct current power delivery capable of supporting up to one megawatt per rack — a tenfold increase from typical deployments.

"The AI era requires even greater power delivery capabilities," explained Madhusudan Iyengar and Amber Huffman, Google principal engineers, in an April 2025 blog post. "ML will require more than 500 kW per IT rack before 2030."

Google is collaborating with Meta and Microsoft to standardize electrical and mechanical interfaces for high-voltage DC distribution. The company selected 400 VDC specifically to leverage the supply chain established by electric vehicles, "for greater economies of scale, more efficient manufacturing, and improved quality and scale."

On cooling, Google revealed it will contribute its fifth-generation cooling distribution unit design to the Open Compute Project. The company has deployed liquid cooling "at GigaWatt scale across more than 2,000 TPU Pods in the past seven years" with fleet-wide availability of approximately 99.999%.

Water can transport approximately 4,000 times more heat per unit volume than air for a given temperature change — critical as individual AI accelerator chips increasingly dissipate 1,000 watts or more.
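The order of magnitude can be checked from volumetric heat capacity, which is density multiplied by specific heat. The sketch below uses approximate textbook property values near room temperature and an assumed 10-kelvin coolant temperature rise; neither comes from Google, and the exact ratio shifts with temperature and pressure.

```python
# Approximate textbook properties near room temperature (assumed values).
WATER_DENSITY = 997.0          # kg/m^3
WATER_SPECIFIC_HEAT = 4181.0   # J/(kg*K)
AIR_DENSITY = 1.2              # kg/m^3
AIR_SPECIFIC_HEAT = 1005.0     # J/(kg*K)


def volumetric_heat_capacity(density: float, specific_heat: float) -> float:
    """Heat absorbed per cubic metre per kelvin of temperature rise, in J/(m^3*K)."""
    return density * specific_heat


def flow_litres_per_minute(power_watts: float, vol_heat_capacity: float,
                           delta_t_kelvin: float) -> float:
    """Coolant flow needed to carry away power_watts at a given temperature rise."""
    cubic_metres_per_second = power_watts / (vol_heat_capacity * delta_t_kelvin)
    return cubic_metres_per_second * 1000 * 60


WATER_VHC = volumetric_heat_capacity(WATER_DENSITY, WATER_SPECIFIC_HEAT)
AIR_VHC = volumetric_heat_capacity(AIR_DENSITY, AIR_SPECIFIC_HEAT)

if __name__ == "__main__":
    print(f"Water-to-air ratio: {WATER_VHC / AIR_VHC:.0f}x")
    # Hypothetical case: one 1,000 W chip and a 10 K coolant temperature rise.
    print(f"Water: {flow_litres_per_minute(1000, WATER_VHC, 10):.2f} L/min")
    print(f"Air:   {flow_litres_per_minute(1000, AIR_VHC, 10):.0f} L/min")
```

With these inputs the ratio comes out at roughly 3,500, in the same range as the figure of about 4,000 cited above. Cooling a 1,000-watt chip would take about 1.4 litres of water per minute, against several thousand litres of air.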

Custom silicon gambit challenges Nvidia's AI accelerator dominance

Google's announcements come as the AI infrastructure market reaches an inflection point. While Nvidia maintains overwhelming dominance in AI accelerators — holding an estimated 80-95% market share — cloud providers are increasingly investing in custom silicon to differentiate their offerings and improve unit economics.

Amazon Web Services pioneered this approach with Graviton Arm-based CPUs and Inferentia / Trainium AI chips. Microsoft has developed Cobalt processors and is reportedly working on AI accelerators. Google now offers the most comprehensive custom silicon portfolio among major cloud providers.

The strategy faces inherent challenges. Custom chip development requires enormous upfront investment — often billions of dollars. The software ecosystem for specialized accelerators lags behind Nvidia's CUDA platform, which benefits from 15+ years of developer tools. And rapid AI model architecture evolution creates risk that custom silicon optimized for today's models becomes less relevant as new techniques emerge.

Yet Google argues its approach delivers unique advantages. "This is how we built the first TPU ten years ago, which in turn unlocked the invention of the Transformer eight years ago — the very architecture that powers most of modern AI," the company noted, referring to the seminal "Attention Is All You Need" paper from Google researchers in 2017.

The argument is that tight integration — "model research, software, and hardware development under one roof" — enables optimizations impossible with off-the-shelf components.

Beyond Anthropic, several other customers provided early feedback. Lightricks, which develops creative AI tools, reported that early Ironwood testing "makes us highly enthusiastic" about creating "more nuanced, precise, and higher-fidelity image and video generation for our millions of global customers," said Yoav HaCohen, the company's research director.

Google's announcements raise questions that will play out over coming quarters. Can the industry sustain current infrastructure spending, with major AI companies collectively committing hundreds of billions of dollars? Will custom silicon prove economically superior to Nvidia GPUs? How will model architectures evolve?

For now, Google appears committed to a strategy that has defined the company for decades: building custom infrastructure to enable applications impossible on commodity hardware, then making that infrastructure available to customers who want similar capabilities without the capital investment.

As the AI industry transitions from research labs to production deployments serving billions of users, that infrastructure layer — the silicon, software, networking, power, and cooling that make it all run — may prove as important as the models themselves.

And if Anthropic's willingness to commit to accessing up to one million chips is any indication, Google's bet on custom silicon designed specifically for the age of inference may be paying off just as demand reaches its inflection point.